Coping with snapshot tests on different machine architectures

Marcin Gorny

January 24, 2025

At Paramount, we use snapshot tests to make sure the visual layer of our applications is properly tested. Snapshot tests verify that new iterations of the application don't break existing views. They do this by rendering a view and comparing the result with a predefined reference image. If the images are identical, the test passes; otherwise, it fails.

Unfortunately, the rendering outcome depends on the architecture of the machine that runs the tests. The images look identical to the human eye, but the image comparator detects even the slightest difference. Our project has plenty of rounded corners, image blurs, and gradients that are rendered differently on Intel-based and ARM-based Macs, and our CI infrastructure still relies on some Intel-based Macs.

To solve this problem, the author of the snapshot testing SDK introduced two related parameters that can be specified:

- Precision

- Perceptual precision

The two parameters measure different things. Precision measures the percentage of pixels in the newly created image that are identical to the reference image. Perceptual precision looks at the largest color deviation of any single pixel, so it focuses on how much colors differ rather than how many pixels differ. This makes perceptual precision a good fit for gradient-based views, which ARM-based Macs render slightly differently than Intel-based ones. The default value for both parameters is 100%. If either comparison succeeds, the whole test is marked as successful. Because we currently don't specify perceptual precision anywhere in our codebase, only the overall number of identical pixels is checked.
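To make the difference between the two metrics concrete, here is a minimal Swift sketch. It is not the SDK's implementation: `RGBAPixel`, `RGBAImage`, `pixelPrecision`, `colorDeviation`, and `perceptualPrecision` are hypothetical names, and the color difference is simplified to the largest per-channel difference divided by 255, whereas a real perceptual comparison would typically measure color distance in a perceptual color space. The example simulates a gradient in which every other column is off by one unit, the kind of tiny rounding difference that can appear between machines.

```swift
// Hypothetical, simplified model of the two snapshot metrics.
// Not the SDK's implementation: colors are compared per RGBA channel.

struct RGBAPixel: Equatable {
    var r: UInt8, g: UInt8, b: UInt8, a: UInt8
}

struct RGBAImage {
    let width: Int
    let height: Int
    var pixels: [RGBAPixel]  // row-major, width * height entries

    init(width: Int, height: Int, fill: RGBAPixel) {
        self.width = width
        self.height = height
        self.pixels = Array(repeating: fill, count: width * height)
    }
}

/// Share of pixels that are exactly identical (the idea behind "precision").
func pixelPrecision(_ reference: RGBAImage, _ candidate: RGBAImage) -> Double {
    precondition(reference.pixels.count == candidate.pixels.count, "size mismatch")
    let identical = zip(reference.pixels, candidate.pixels)
        .filter { pair in pair.0 == pair.1 }
        .count
    return Double(identical) / Double(reference.pixels.count)
}

/// Color deviation of one pixel in 0...1: the largest channel difference / 255.
/// A simplified stand-in for a perceptual color-distance metric.
func colorDeviation(_ a: RGBAPixel, _ b: RGBAPixel) -> Double {
    let channelDiffs = [
        abs(Int(a.r) - Int(b.r)), abs(Int(a.g) - Int(b.g)),
        abs(Int(a.b) - Int(b.b)), abs(Int(a.a) - Int(b.a)),
    ]
    return Double(channelDiffs.max() ?? 0) / 255.0
}

/// One minus the worst single-pixel deviation (the idea behind "perceptual precision").
func perceptualPrecision(_ reference: RGBAImage, _ candidate: RGBAImage) -> Double {
    precondition(reference.pixels.count == candidate.pixels.count, "size mismatch")
    let worst = zip(reference.pixels, candidate.pixels)
        .map { pair in colorDeviation(pair.0, pair.1) }
        .max() ?? 0
    return 1.0 - worst
}

// Reference: a 10x10 horizontal gray gradient.
var reference = RGBAImage(width: 10, height: 10, fill: RGBAPixel(r: 0, g: 0, b: 0, a: 255))
for y in 0..<reference.height {
    for x in 0..<reference.width {
        let v = UInt8(x * 20)
        reference.pixels[y * reference.width + x] = RGBAPixel(r: v, g: v, b: v, a: 255)
    }
}

// Candidate: every odd column is off by one unit in the red channel,
// mimicking a tiny rounding difference between machines.
var candidate = reference
for i in candidate.pixels.indices where i % candidate.width % 2 == 1 {
    candidate.pixels[i].r += 1
}

print(pixelPrecision(reference, candidate))       // 0.5
print(perceptualPrecision(reference, candidate))  // about 0.996
```

Half of the pixels differ, so the identical-pixel metric drops to 50%, while the worst single-pixel deviation is only 1/255, which keeps the color-deviation metric above 99.6%. That asymmetry is why a color-deviation check tolerates rendering noise in gradients much better than a count of identical pixels.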

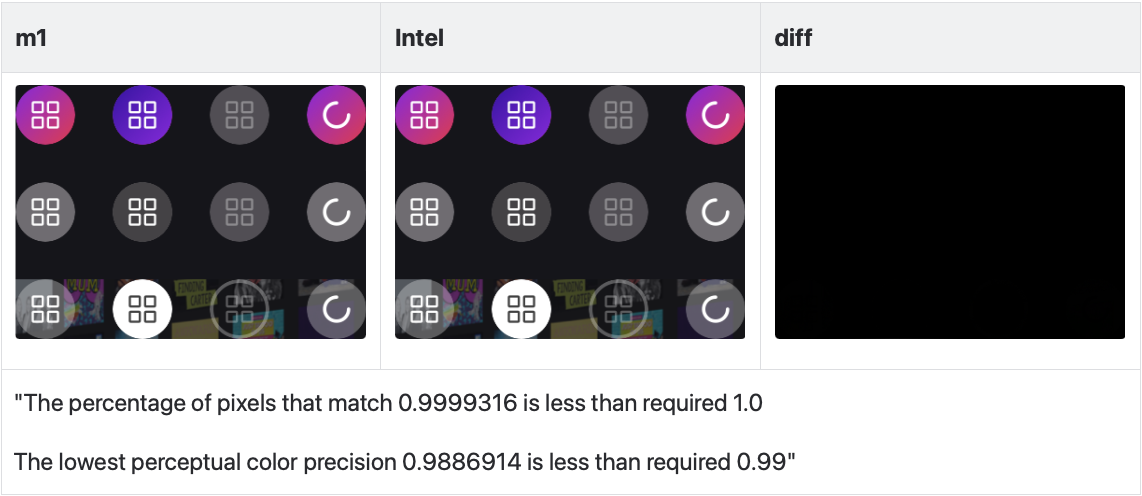

Here are a few examples of failing tests.

1) Let's check how the snapshot test performs for an image with a gradient and rounded corners:

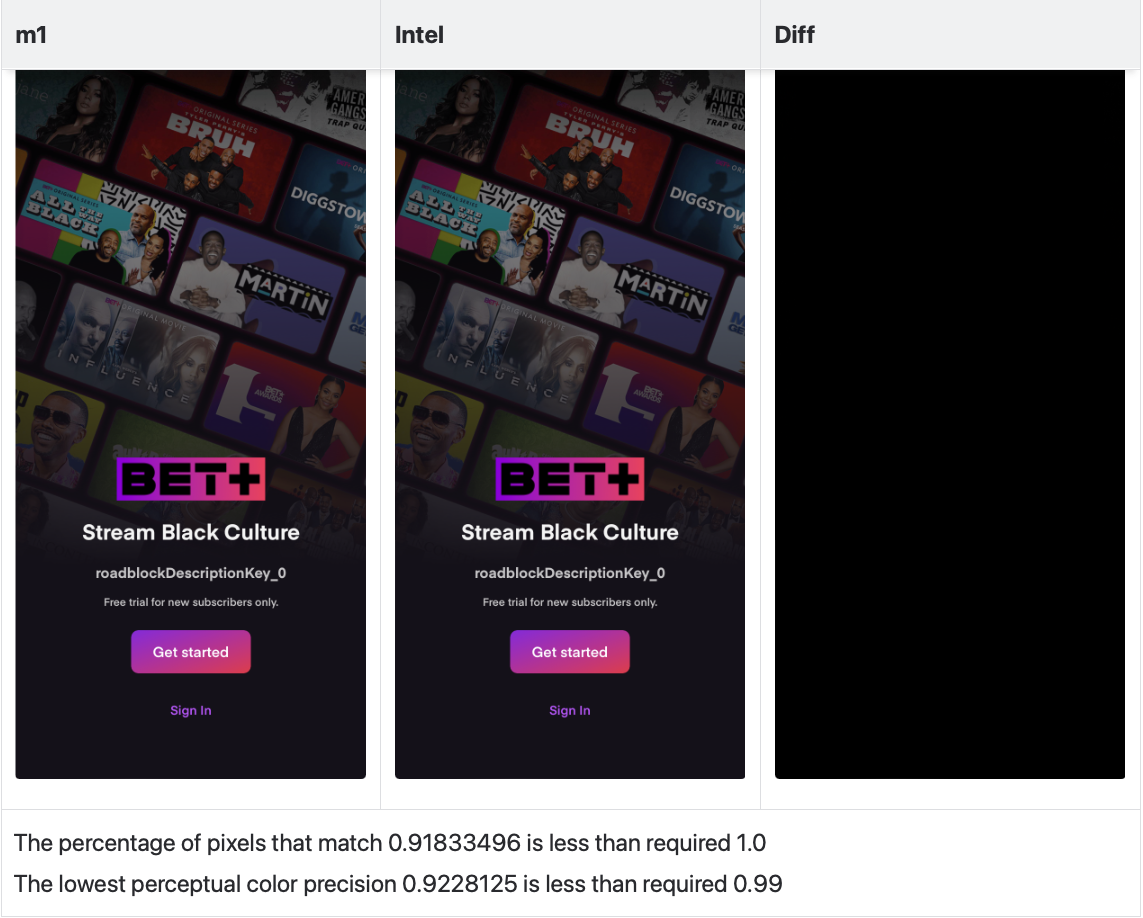

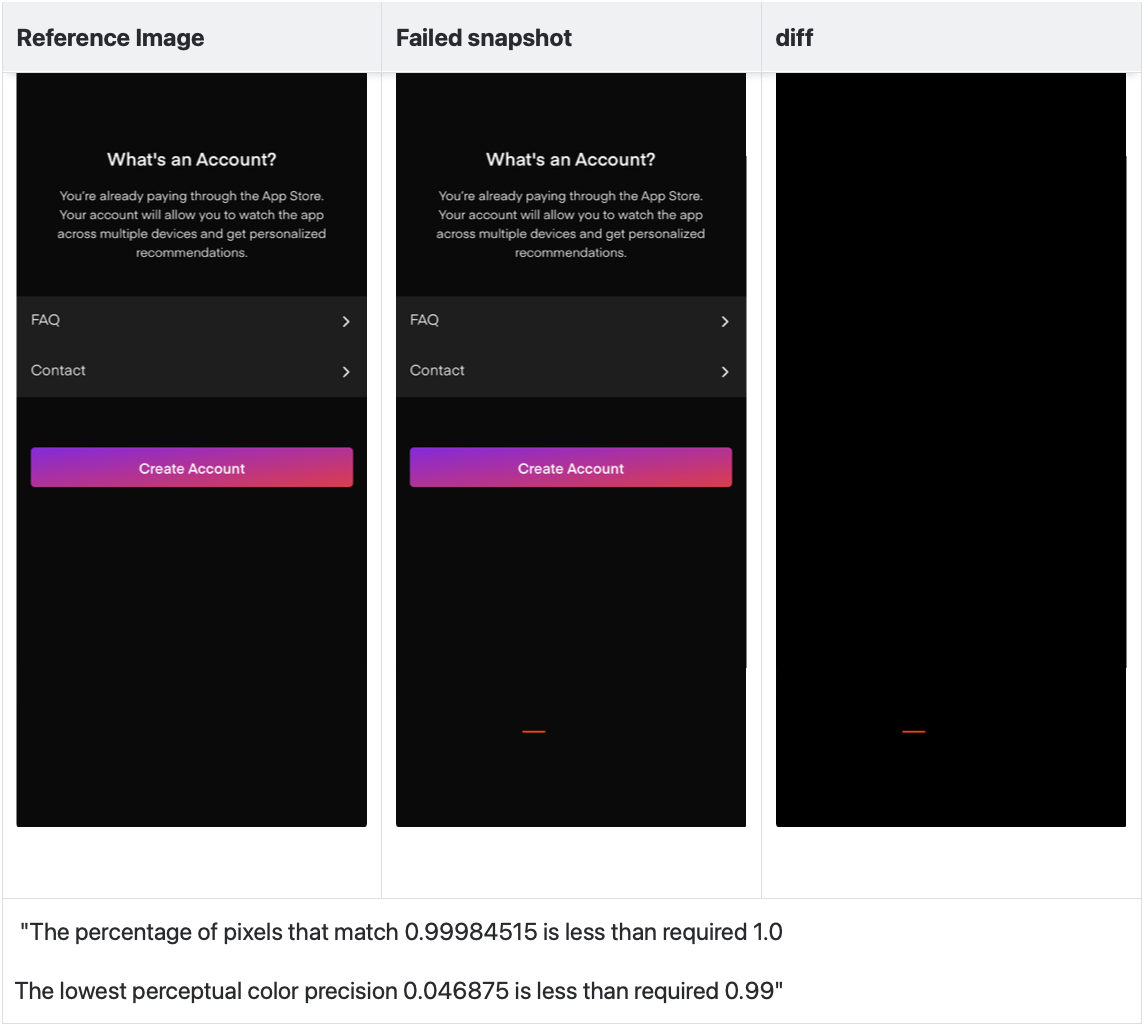

About 99% of the pixels are identical, while the perceptual precision (the color difference) is around 98%. To the human eye, this difference is negligible. Here is an example of a login screen for the BET+ application, which has a blurred background:

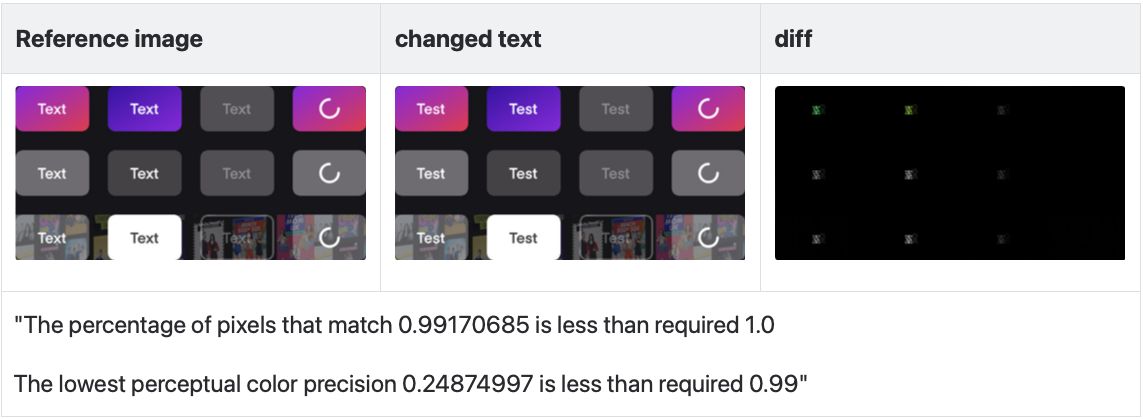

The largest color difference for a single pixel in the example above is around 8%, yet to the human eye the difference is not visible at all.

2) For the second test, let's use a similar image with slightly different text: the text was changed from the expected “Text” to “Test”. Here are the key results:

As you can see above, over 99% of the pixels still match the reference color. However, because the text differs from the reference image, perceptual precision drops to 24%. This change is visible to the human eye, so a well-written snapshot test should fail.
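The text-change case can be sketched with two hypothetical metrics that mirror the definitions earlier in this post: the share of identical pixels, and one minus the largest single-pixel deviation. To keep it short, this sketch assumes a grayscale image with one 8-bit value per pixel; the function names and the 0.99 and 0.98 thresholds are illustrative assumptions, so the numbers will not match the measurements above. A small, high-contrast edit such as a changed letter touches very few pixels but changes them completely.

```swift
// Hypothetical grayscale model: one 8-bit luminance value per pixel.

/// Share of pixels that are exactly identical.
func pixelPrecision(_ reference: [UInt8], _ candidate: [UInt8]) -> Double {
    precondition(reference.count == candidate.count, "size mismatch")
    let identical = zip(reference, candidate).filter { pair in pair.0 == pair.1 }.count
    return Double(identical) / Double(reference.count)
}

/// One minus the worst single-pixel deviation, scaled to 0...1.
func perceptualPrecision(_ reference: [UInt8], _ candidate: [UInt8]) -> Double {
    precondition(reference.count == candidate.count, "size mismatch")
    let worst = zip(reference, candidate)
        .map { pair in abs(Int(pair.0) - Int(pair.1)) }
        .max() ?? 0
    return 1.0 - Double(worst) / 255.0
}

let width = 100, height = 100
let reference = [UInt8](repeating: 255, count: width * height)  // white canvas
var candidate = reference
// Simulate one edited glyph: 40 pixels in row 50 turn from white to black.
for x in 30..<70 {
    candidate[50 * width + x] = 0
}

let precision = pixelPrecision(reference, candidate)        // 0.996
let perceptual = perceptualPrecision(reference, candidate)  // 0.0

// Illustrative thresholds, each evaluated on its own.
let requiredPrecision = 0.99
let requiredPerceptual = 0.98
print("precision \(precision) passes: \(precision >= requiredPrecision)")
print("perceptual \(perceptual) passes: \(perceptual >= requiredPerceptual)")
```

Only 0.4% of the pixels change, so the identical-pixel metric stays above 99%, while the worst-pixel metric drops to zero. It is the color-deviation metric that exposes the edited text.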

3) For the third test, let's check whether snapshot testing detects tiny visual artifacts.

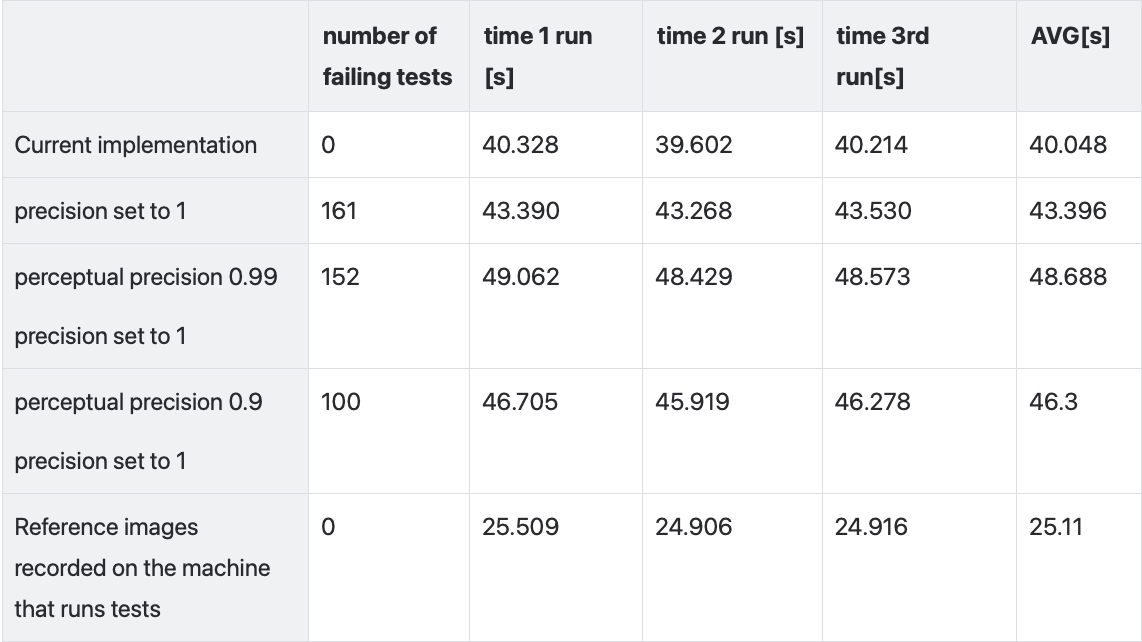

Are there any downsides to perceptual precision? Running a test suite with it takes significantly longer than running regular snapshot tests. Below is a comparison table:

Conclusion: until all our build machines share the same processor architecture, we should use perceptual precision where necessary. This keeps our tests reliable, which is our top priority.