Voice assistant apps through the eyes of a developer

Przemysław Latoch

July 13, 2022

Backstory

At Paramount, there are some interesting projects you can be assigned to. I was lucky enough to join a team that delivers voice apps for our products. It's not a topic you often hear about in tech talks or read about on tech blogs. It's something exotic! We mainly build apps for Alexa (called skills), but we also have some experience with Google Assistant (where they are called actions). In this article, I would like to bring you closer to my day-to-day work and show you how to start building voice products yourself.

Where to Start

Let's start by choosing a platform. Although both platforms work in a similar way, I would recommend Alexa: it has good documentation, an active community, and more SDKs to choose from. It also offers many features for devices with screens.

To get started, open the Alexa Developer Console in your browser, create an account, and create your first skill.

Basics

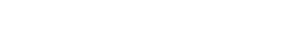

There are many options to choose from. You can use one of the predefined skill models, but the most convenient choice is a custom skill, as it provides the most flexibility.

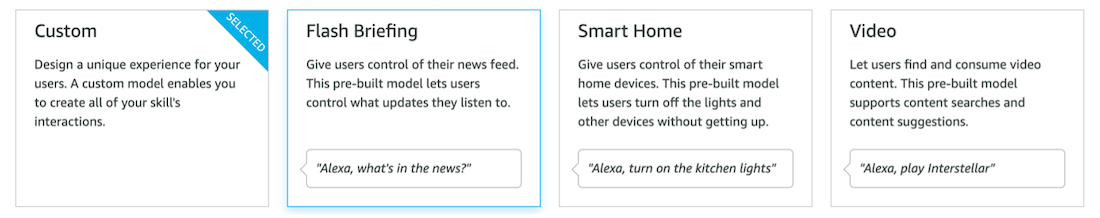

Next, choose how to host your backend. You can either run your own backend deployed wherever you want, or use the Alexa-hosted solution, which limits you to Node.js or Python. Supplying your own backend gives you more options to choose from.

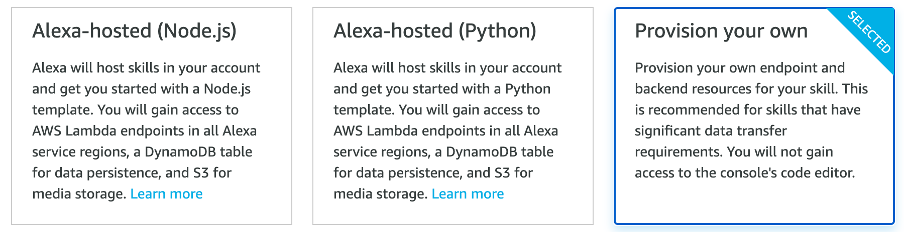

Then pick the template you want to use. You can also start from scratch if none of the templates suits your needs.

Once that's done, you will see your skill's page in the console.

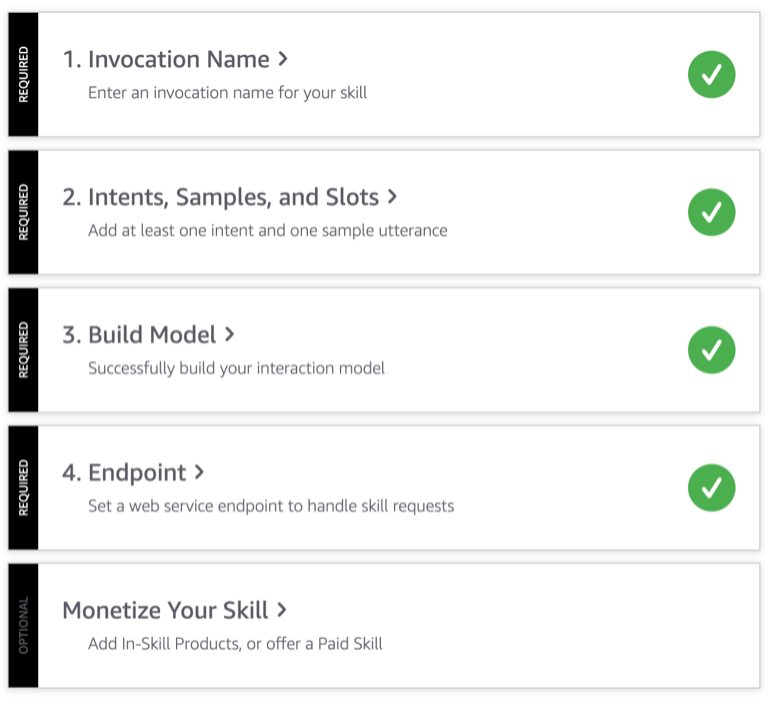

Here you can set the invocation name of your skill, which is the name that you and your users will say to launch your application. Intents, together with their sample utterances, define what your skill understands. When a user says one of those samples, Alexa sends the matching intent in a request to your backend, so that you can process it the way you want.
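To make the relationship between the invocation name, intents, and sample utterances more concrete, here is a toy model of it in plain Java. It is only an illustration under simplifying assumptions: the `ToyInteractionModel` class, the invocation name "hello world", and the sample lists are hypothetical, and the exact-match lookup is far cruder than Alexa's real language understanding, which also recognizes phrasings close to your samples. In the console you define this model through the UI (or its JSON editor), not in code.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Toy stand-in for a skill's interaction model (hypothetical values throughout).
final class ToyInteractionModel {
    static final String INVOCATION_NAME = "hello world";

    // Each intent lists the sample utterances that should resolve to it.
    static final Map<String, List<String>> INTENTS = new LinkedHashMap<>();
    static {
        INTENTS.put("HelloWorldIntent", List.of("hello", "say hello", "say hi"));
        // Built-in intent; these extra samples are illustrative only.
        INTENTS.put("AMAZON.HelpIntent", List.of("help", "what can I do"));
    }

    // "open <invocation name>" is one of the phrases that launches a custom skill.
    static boolean isLaunchPhrase(String utterance) {
        return utterance.trim().toLowerCase(Locale.ROOT).equals("open " + INVOCATION_NAME);
    }

    // Exact, case-insensitive lookup from a spoken phrase to an intent name.
    // Real Alexa NLU generalizes beyond the listed samples; this does not.
    static Optional<String> resolveIntent(String utterance) {
        String normalized = utterance.trim().toLowerCase(Locale.ROOT);
        for (Map.Entry<String, List<String>> intent : INTENTS.entrySet()) {
            for (String sample : intent.getValue()) {
                if (sample.toLowerCase(Locale.ROOT).equals(normalized)) {
                    return Optional.of(intent.getKey());
                }
            }
        }
        return Optional.empty(); // no sample matched
    }
}
```

For example, `resolveIntent("Say Hello")` yields an `Optional` containing `HelloWorldIntent`, while a phrase with no matching sample yields an empty `Optional`.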

Lastly, you must set an endpoint. You only need to provide one; Alexa uses it to send requests from the Amazon cloud to your backend.

After making these changes, click the Build Model button to rebuild the model and update your skill.

How it works

Speech recognition is handled by Amazon. The phrases your skill can understand must be added to the skill definition described in the previous section. Every intent has sample utterances: when a user speaks one of them, Alexa resolves it to the matching intent and sends that intent in a request to your skill. Your backend has to provide request handlers (implementations of RequestHandler) that determine how each intent is handled. The most basic example of such a handler, from the Alexa documentation, looks like this:

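```java
import com.amazon.ask.dispatcher.request.handler.HandlerInput;
import com.amazon.ask.dispatcher.request.handler.RequestHandler;
import com.amazon.ask.model.Response;
import com.amazon.ask.request.Predicates;

import java.util.Optional;

public class HelloWorldIntentHandler implements RequestHandler {

    // Accept only requests that Alexa resolved to HelloWorldIntent.
    @Override
    public boolean canHandle(HandlerInput input) {
        return input.matches(Predicates.intentName("HelloWorldIntent"));
    }

    // Speak "Hello world" and attach a simple card with the same text.
    @Override
    public Optional<Response> handle(HandlerInput input) {
        String speechText = "Hello world";
        return input.getResponseBuilder()
                .withSpeech(speechText)
                .withSimpleCard("HelloWorld", speechText)
                .build();
    }
}
```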
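The SDK decides which handler receives each request. As a rough, SDK-free sketch of that idea, assuming handlers are consulted in the order they were registered and the first whose `canHandle` returns true wins, the hypothetical `ToyRequest`, `ToyHandler`, and `ToyDispatcher` types below mimic the `canHandle`/`handle` pair of the handler above. They are not Alexa Skills Kit SDK classes.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical stand-ins for an incoming request and a handler.
// These are NOT classes from the Alexa Skills Kit SDK.
final class ToyRequest {
    final String type;       // e.g. "LaunchRequest" or "IntentRequest"
    final String intentName; // null unless type is "IntentRequest"

    ToyRequest(String type, String intentName) {
        this.type = type;
        this.intentName = intentName;
    }
}

interface ToyHandler {
    boolean canHandle(ToyRequest request);
    Optional<String> handle(ToyRequest request); // returns the text to speak
}

final class ToyDispatcher {
    private final List<ToyHandler> handlers;

    ToyDispatcher(List<ToyHandler> handlers) {
        this.handlers = List.copyOf(handlers);
    }

    // Ask each handler in registration order; the first that accepts answers.
    Optional<String> dispatch(ToyRequest request) {
        for (ToyHandler handler : handlers) {
            if (handler.canHandle(request)) {
                return handler.handle(request);
            }
        }
        return Optional.empty(); // nothing matched
    }

    // One handler for launching the skill, one mirroring HelloWorldIntentHandler.
    static ToyDispatcher withExampleHandlers() {
        ToyHandler launch = new ToyHandler() {
            @Override
            public boolean canHandle(ToyRequest r) {
                return "LaunchRequest".equals(r.type);
            }

            @Override
            public Optional<String> handle(ToyRequest r) {
                return Optional.of("Welcome! Say hello to me.");
            }
        };
        ToyHandler hello = new ToyHandler() {
            @Override
            public boolean canHandle(ToyRequest r) {
                return "IntentRequest".equals(r.type) && "HelloWorldIntent".equals(r.intentName);
            }

            @Override
            public Optional<String> handle(ToyRequest r) {
                return Optional.of("Hello world");
            }
        };
        return new ToyDispatcher(List.of(launch, hello));
    }
}
```

Keeping `canHandle` a cheap check and doing the real work in `handle` is what makes it practical to register many small handlers, roughly one per intent.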

As a response, you can provide either text for Alexa to speak or your own audio, such as an MP3 or MP4 file. For devices with displays, you can also supply images and more complex templates. For details, look up APL (Alexa Presentation Language) in the docs.
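Under the hood, the response your backend returns is a JSON document. The sketch below builds a minimal one by hand in plain Java, using SSML so that speech can be followed by an audio clip through the `<audio>` tag. The `ToyResponseBuilder` class and the example URL are hypothetical; in a real skill the SDK's response builder produces this JSON for you, and Alexa restricts what audio clips it accepts (for example, they must be served over HTTPS), so check the SSML reference before relying on this.

```java
// Hypothetical helper that hand-builds the JSON an Alexa custom skill returns.
// In a real skill the SDK's ResponseBuilder produces this for you.
final class ToyResponseBuilder {

    // SSML is XML, so these characters must be escaped in text and attributes.
    static String escapeXml(String text) {
        return text.replace("&", "&amp;")
                .replace("<", "&lt;")
                .replace(">", "&gt;")
                .replace("\"", "&quot;");
    }

    // Minimal JSON string escaping (backslash, quote, newline) for this sketch.
    static String escapeJson(String text) {
        return text.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n");
    }

    // Speech text, optionally followed by an audio clip (audioUrl may be null).
    static String ssml(String speech, String audioUrl) {
        StringBuilder sb = new StringBuilder("<speak>").append(escapeXml(speech));
        if (audioUrl != null) {
            sb.append("<audio src=\"").append(escapeXml(audioUrl)).append("\"/>");
        }
        return sb.append("</speak>").toString();
    }

    // Wraps SSML in the response envelope: version, outputSpeech, shouldEndSession.
    static String responseJson(String ssml, boolean endSession) {
        return "{\"version\":\"1.0\",\"response\":{\"outputSpeech\":{\"type\":\"SSML\",\"ssml\":\""
                + escapeJson(ssml)
                + "\"},\"shouldEndSession\":" + endSession + "}}";
    }
}
```

For example, `ssml("Tom & Jerry", null)` escapes the ampersand as `&amp;`, because an unescaped `&` would make the SSML invalid XML.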

That is the simplest flow of an Alexa skill. But wait a minute: once you have a running application, you will probably want to test it, won't you?

There are at least two options. The first is provided by Amazon: go to the developer console, select your skill, and open the Test tab. There you can type what you would say to a device in real life, and the response is returned both as audio and as JSON. This method has some limitations, mainly when testing devices with displays: nothing will be rendered on your screen, so you won't be able to check the visual output properly.

The second option, which I use the most, is to run my service locally and use a tool such as ngrok to create a tunnel that exposes my local traffic under a public endpoint. You just have to set that endpoint in the Endpoint section of your skill in the Developer Console. As simple as that!
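For the ngrok setup, any HTTP service listening locally will do. Below is a minimal sketch using the JDK's built-in `com.sun.net.httpserver.HttpServer`, so it needs no extra dependencies. Assumptions: the `/alexa` path and port 8080 are arbitrary choices, and the server ignores the request body and always returns the same plain-text response. It also skips verifying that requests really come from Alexa, which a self-hosted production endpoint has to do; as far as I know, the ASK SDK for Java's servlet support can take care of that for you.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

// Minimal local endpoint to expose through ngrok. It does NOT verify Alexa
// request signatures and does NOT parse the request; it always answers with
// a fixed response. The "/alexa" path is a hypothetical choice.
final class LocalSkillEndpoint {
    static final String FIXED_RESPONSE =
            "{\"version\":\"1.0\",\"response\":{\"outputSpeech\":"
            + "{\"type\":\"PlainText\",\"text\":\"Hello from my laptop\"},"
            + "\"shouldEndSession\":true}}";

    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/alexa", exchange -> {
            try (InputStream in = exchange.getRequestBody()) {
                in.readAllBytes(); // request JSON is ignored in this sketch
            }
            if (!"POST".equals(exchange.getRequestMethod())) {
                exchange.sendResponseHeaders(405, -1); // Alexa sends POST requests
                exchange.close();
                return;
            }
            byte[] body = FIXED_RESPONSE.getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "application/json;charset=UTF-8");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        start(8080);
        System.out.println("Listening on http://localhost:8080/alexa; now run: ngrok http 8080");
    }
}
```

With the server running, `ngrok http 8080` prints a public HTTPS forwarding URL; append `/alexa` to it and paste the result into the Endpoint section.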

What next?

Amazon maintains well-written documentation for the Alexa Skills Kit. Whatever your needs, you will find relevant resources there. Amazon also adds new features regularly, so follow their newsletter if you want to stay up to date!

Summary

If you want to try something new, automate your home, or just be part of a fast-growing market, voice apps are for you. Just pick a platform and have fun!